kvm and libvirtd networking cheat sheet

1 kvm Kernel-based Virtual Machine

KVM is a virtualization solution built into the Linux kernel (mainline since
kernel 2.6.20) for x86 hardware. It is implemented as a loadable kernel
module, kvm.ko, plus a processor-specific module: kvm-intel.ko for Intel and
kvm-amd.ko for AMD.

It lets the kernel itself act as a hypervisor, using the Intel VT-x or AMD-V
hardware extensions. It also allows nested hypervisors: a guest VM can itself
run a hypervisor and have guests of its own.

2 install kvm on CentOS8

2.1 Verify CPU support of Intel VT or AMD-V

Step 1: Verify that your CPU supports the Intel VT or AMD-V virtualization
extensions. On some systems these are disabled in the BIOS and you may need to
enable them.

grep -E "vmx|svm" /proc/cpuinfo

You can also do the same with lscpu command

lscpu | grep Virtualization

Virtualization: VT-x

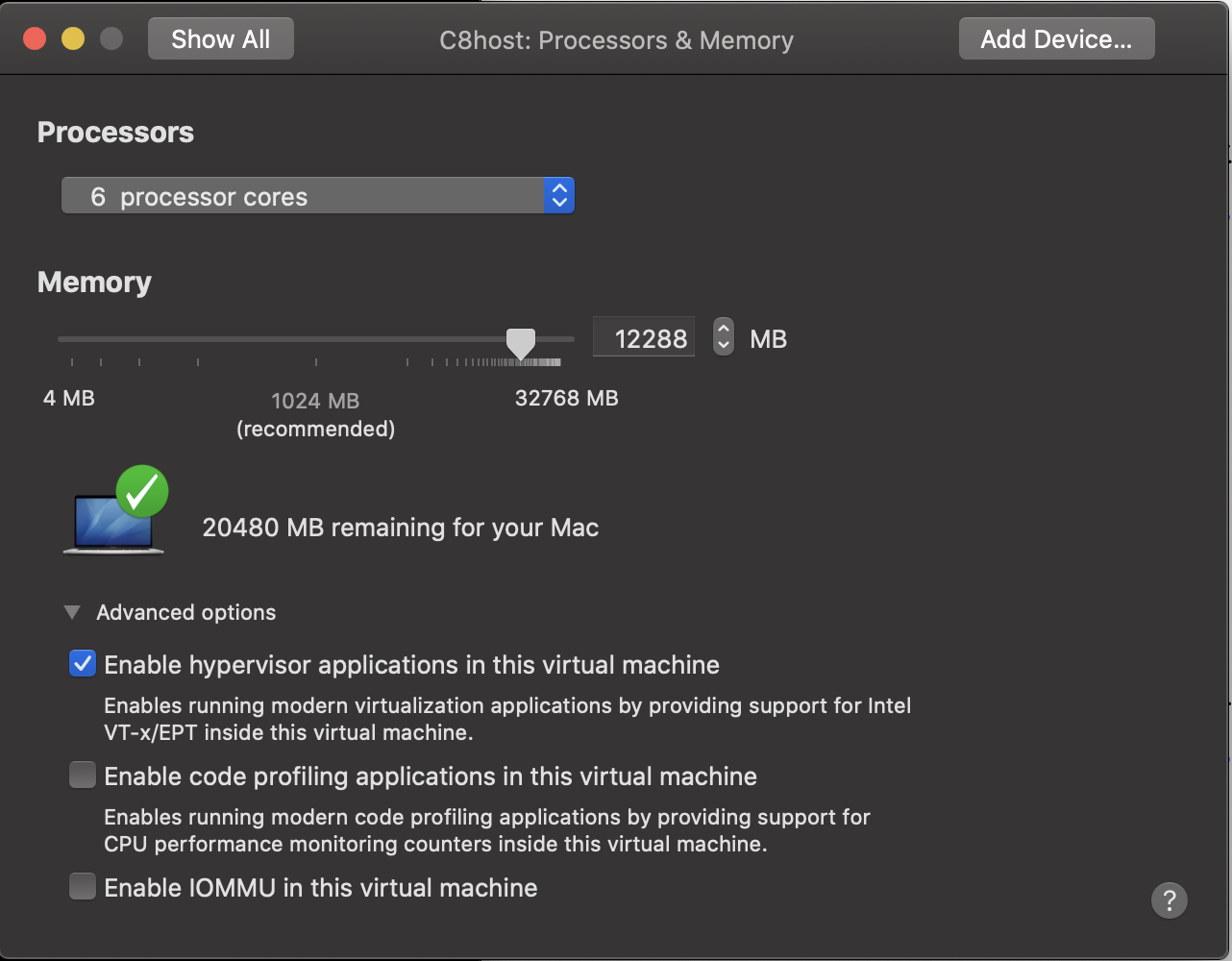
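As a minimal sketch, the same check can be wrapped in a small shell function. The name virt_flag and its optional file argument are illustrative assumptions, not part of any tool; the argument only exists so the check can be tried against a saved copy of cpuinfo.

```shell
# Hypothetical helper: report the hardware virtualization flag found in a
# cpuinfo-style file (defaults to /proc/cpuinfo).
virt_flag() {
    cpuinfo="${1:-/proc/cpuinfo}"
    if grep -qw vmx "$cpuinfo"; then
        echo "vmx"      # Intel VT-x
    elif grep -qw svm "$cpuinfo"; then
        echo "svm"      # AMD-V
    else
        echo "none"     # unsupported, or disabled in the BIOS / outer hypervisor
    fi
}
```

Calling virt_flag with no argument checks the running system; a result of "none" is what I was seeing before fixing the Fusion settings.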

2.2 Fix for my VMWare fusion CentOS8

I was not seeing any output on "grep -E '(vmx|svm)' /proc/cpuinfo" so I suspected that the fusion settings on my CentOS8 host did not support hardware virtualization.

I was right:

2.3 Result:

I was now getting the responses I was looking for. For example:

modprobe -a kvm_intel
ls -l /sys/module/kvm/
ls -l /sys/module/kvm_intel/
cat /sys/module/kvm_intel/parameters/nested

2.4 For details on modprobe, see my own notes in linux.org

Step 2: ummmmm??? What happened to step 2 ?

Step 3: Install KVM / QEMU on RHEL/ CentOS 8

KVM packages are distributed on RHEL 8 via AppStream repository. Install KVM

on your RHEL 8 server by running the following commands:

sudo dnf upgrade        # like sudo yum update, but better and newer
sudo dnf install @virt

After installation, verify that Kernel modules are loaded

$ lsmod | grep kvm
kvm_intel             233472  0
kvm                   737280  1 kvm_intel

3 libvirtd and manual/custom config of networking.

Installing libvirtd and starting its services creates a virtual bridge interface, virbr0, on network 192.168.122.0/24. Your setup may require a different network. Here we tune virbr0 and eth1 (eth1 is the base interface for virbr0).

Update the interface configuration files as below

/etc/sysconfig/network-scripts/ifcfg-eth1

TYPE=Ethernet
BOOTPROTO=none
NAME=eth1
ONBOOT=yes
BRIDGE=virbr0
HWADDR=00:0c:29:41:15:0a

and

cat /etc/sysconfig/network-scripts/ifcfg-virbr0

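TYPE=Bridge
DEVICE=virbr0
BOOTPROTO=none
ONBOOT=yes
IPADDR=192.168.1.10
NETMASK=255.255.255.0
GATEWAY=192.168.1.1

Note: replace the IP address, MAC and UUID info with the values appropriate to your setup. TYPE is case-sensitive and must be spelled Bridge (see the libvirt Networking section further down).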

Enable the IPv4 forwarding

# echo "net.ipv4.ip_forward = 1" | tee /usr/lib/sysctl.d/60-libvirtd.conf
# /sbin/sysctl -p /usr/lib/sysctl.d/60-libvirtd.conf

Configure Firewall

firewall-cmd --permanent --direct --passthrough ipv4 -I FORWARD -i bridge0 -j ACCEPT
firewall-cmd --permanent --direct --passthrough ipv4 -I FORWARD -o bridge0 -j ACCEPT
firewall-cmd --reload

(Replace bridge0 with the name of your bridge interface; in the setup above that is virbr0.)

4 Overview of Virtualization Components in Linux

- virt-manager: GUI tool; can use QEMU and/or KVM as its hypervisor
- kvm: the actual hypervisor
- qemu: the emulator that emulates VMs (pronounced keemu and/or queue-emu)
- virt-viewer
- virsh: the virtualization shell
- and a bunch of libraries…

See virt-tools.org for a good overview of what is what w.r.t. virtualization

5 Command Line control of VMM using virsh

virsh, or 'virtual shell', can be executed with command line arguments as
described below, e.g. sudo virsh list --all. You can also enter an interactive
virsh shell, in which the same command is simply list --all.

Enter the shell with sudo virsh

5.1 virsh

sudo virsh list              # shows only active VMs
sudo virsh list --all
sudo virsh list --inactive
sudo virsh start vm1
sudo virsh edit vm1          # where vm1 is your VM's name

5.1.1 virsh commands for network (the KVM internal virtual network, that is)

The virtual network used by your VM may not be running. If so, you will get errors such as "Failed to add tap interface 'vnet%d' to bridge 'virbr0' No such file or directory". This is usually the 'default' network, which can be started with:

virsh net-start default            # start the default virtual network
virsh net-destroy default          # stop it; note net-stop is NOT a correct command
sudo virsh net-edit OPS335-nat-dhcp
sudo virsh net-info <network>
sudo virsh net-list --inactive
sudo virsh net-list --all
sudo virsh net-list --autostart
sudo virsh net-list --persistent
sudo virsh net-list --transient
sudo virsh net-list --name         # print only the network names, one per line
sudo virsh net-undefine <network>  # undefine the config of a persistent network;
                                   # if the network is active, it becomes transient
sudo virsh net-update              # read the man page on how to change a live network
sudo virsh net-dhcp-leases <network> [mac]

net-dhcp-leases lists the DHCP leases for all network interfaces connected to
the given virtual network, or limits the output to one interface if a MAC
address is specified.

virsh dumpxml $your-vm-name | grep acpi
virsh dumpxml $your-vm-name > ~/backups/saved-your-vm-name
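To spot a stopped network quickly, the net-list output can be filtered. This is only a sketch: inactive_nets is a made-up helper name, and it assumes the usual table layout (one title line, one dashed line, then Name and State as the first two columns).

```shell
# Hypothetical helper: read "virsh net-list --all" output on stdin and print
# the names of networks whose State column is "inactive".
inactive_nets() {
    # Skip the two header lines, then match on the second column.
    awk 'NR > 2 && $2 == "inactive" { print $1 }'
}
```

Typical use would be: sudo virsh net-list --all | inactive_nets, followed by virsh net-start on each name it prints.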

5.2 xml stored in /var/lib/libvirt/images? follow up with this.

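Other than using the above "dumpxml" command to write an xml file wherever
you want to put it, libvirt keeps its own production xml for each defined VM.
For system-level VMs (qemu:///system) that is normally
/etc/libvirt/qemu/<vm-name>.xml; /var/lib/libvirt/images holds the disk
images, not the xml. Use virsh edit rather than editing those files by hand.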

5.3 virsh commands to get information

sudo virsh info?
sudo virsh dominfo vm1
sudo virsh dominfo vm1 | grep mem    # result is in KiB
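If only the number is wanted (for example to feed into setmem arithmetic), the dominfo output can be trimmed with awk. A minimal sketch, assuming the "Max memory:     <n> KiB" line format that dominfo prints; max_mem_kib is a hypothetical helper name.

```shell
# Hypothetical helper: read "virsh dominfo <vm>" output on stdin and print
# the Max memory value as a bare number of KiB.
max_mem_kib() {
    # Split on ":"; adding 0 makes awk keep only the leading number.
    awk -F: '/^Max memory/ { print $2 + 0 }'
}
```

Usage would look like: sudo virsh dominfo vm1 | max_mem_kib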

5.4 virsh commands to add more memory

sudo virsh setmaxmem vm1 --size 16777216
sudo virsh setmaxmem vm1 --size 16777216 --live     # or --current
sudo virsh setmem vm1 --size 8388608 --config
sudo virsh setmem vm1 --size 8M --config
sudo virsh setmem vm1 --size 8G --config

or use virsh edit vm1

when on vm1: free -m

5.5 KiB vs KB

Good to know when sizing a VM: the difference between KiB and KB.
KiB is the larger, a power of 2, while KB is a power of 10.

Kilobytes
1 KB = 1,000 bytes (macOS and Linux)
1 KiB = 1,024 bytes (universal) 2^10

Megabytes
1 MB = 1,000,000 bytes
1 MiB = 1,048,576 bytes 2^20

Gigabytes
1 GB = 1,000,000,000 bytes
1 GiB = 1,073,741,824 bytes 2^30; almost 74 megabytes larger.

Terabytes
1 TiB = 1,099,511,627,776 bytes 2^40

Petabytes
1 PiB = 1,125,899,906,842,624 bytes 2^50

Exabytes
1 EiB = 1,152,921,504,606,846,976 bytes 2^60

Zettabytes
1 ZiB = 2^70 bytes

Yottabytes
1 YiB = 2^80 bytes
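Since virsh setmem and setmaxmem default to KiB, a little shell arithmetic avoids typing the long numbers by hand. The helper names below are illustrative only.

```shell
# Hypothetical helpers for converting between GiB, MiB and KiB.
gib_to_kib() { echo $(( $1 * 1024 * 1024 )); }   # 1 GiB = 1024 * 1024 KiB
kib_to_mib() { echo $(( $1 / 1024 )); }          # 1 MiB = 1024 KiB
```

For example, gib_to_kib 8 gives 8388608 and gib_to_kib 16 gives 16777216, the two values used with setmem and setmaxmem above.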

5.6 virsh add virtual CPU

sudo virsh edit vm1                     # then look for <vcpu placement='static'>4</vcpu>
sudo virsh dominfo vm1 | grep -i cpu

5.7 virsh to add virtual disk to a VM

There are two steps involved in creating and attaching a new storage device to a Linux KVM guest VM: create a disk image file, then attach that image to the VM.

Details of the two steps:

5.7.1 Create a disk image file using qemu-img create command

In the following example, we are creating a virtual disk image with 7GB of

size. The disk images are typically located under /var/lib/libvirt/images/

directory. The command to use is qemu-img create

cd /var/lib/libvirt/images/

qemu-img create -f raw myRHELVM1-disk2.img 7G

See man qemu-img for details, but the above example will create an image that

- has a size=7516192768

- a format of raw

- an image name 'myRHELVM1-disk2.img'
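The size reported in bytes is just the 7G suffix expanded in powers of 1024. A small sketch of that arithmetic follows; size_to_bytes is a hypothetical helper, not a qemu-img feature, and it only handles whole numbers with a K, M, G or T suffix.

```shell
# Hypothetical helper: expand a size such as 7G into bytes, using binary
# (1024-based) multipliers.
size_to_bytes() {
    num="${1%[KMGT]}"                 # strip one trailing unit letter, if any
    case "$1" in
        *K) echo $(( num * 1024 )) ;;
        *M) echo $(( num * 1024 * 1024 )) ;;
        *G) echo $(( num * 1024 * 1024 * 1024 )) ;;
        *T) echo $(( num * 1024 * 1024 * 1024 * 1024 )) ;;
        *)  echo "$num" ;;            # no suffix: already bytes
    esac
}
```

size_to_bytes 7G prints 7516192768, matching the size listed above.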

5.7.2 Attach the virtual disk image to the VM

To attach the newly created disk image, use the virsh attach-disk command

as shown below:

virsh attach-disk myRHELVM1 \
    --source /var/lib/libvirt/images/myRHELVM1-disk2.img \
    --target vdb --persistent

# If no errors, you will see: "Disk attached successfully"

The above virsh attach-disk command has the following parameters:

myRHELVM1: the name of the VM.

--source: the full path of the source disk image. This is the one that we
created using the qemu-img command above, i.e. myRHELVM1-disk2.img.

--target: the device name the disk gets inside the guest. In this example, we
want to attach the given disk image as /dev/vdb. Note that we don't need to
specify /dev; it is enough to specify vdb.

--persistent: makes the change persistent, so the disk stays attached to the
VM across restarts.

As you see below, the new /dev/vdb is now available on the VM.

using: fdisk -l | grep vd

5.8 virt-clone

To use the virt-clone command line to clone a virtual machine,

- make sure the virtual machine is shutdown

- run virt-clone

→ virt-clone --connect=qemu:///system --original pangaea --name australinea --file /var/lib/libvirt/images/australinea.qcow2

If your virtual machine target name will match the file name, you can shorten the above to

- run virt-clone (short-cut)

→ virt-clone --connect=qemu:///system --original pangaea --name australinea --auto-clone

5.9 virt-clone to a remote system.

If you are connecting to a remote KVM/QEMU host machine, put the host's address in the URI before /system.

It will look something like:

→ virt-clone --connect=qemu://192.168.1.30/system --original pangaea --name australinea --file /var/lib/libvirt/images/australinea.qcow2

pangaea: name of the VM from which you are cloning.
australinea: name given to the resulting VM after cloning.
australinea.qcow2: the image that australinea boots from.

Check to confirm that the australinea.qcow2 file is successfully stored
in the /var/lib/libvirt/images folder.

5.10 virt-sysprep

virt-sysprep "resets" or "unconfigures" a virtual machine so that clones can

be made from it. The "unconfiguring" just strips any machine specific

customizations, like ssh keys, static ip addresses, etc, so that a clone will

coexist with the source of the clone.

5.10.1 CentOS-8 EOL seems to be virt-sysprep EOL?

On my Alma-Linux image that was upgraded from CentOS-8, virt-sysprep was no longer found. Not sure what the replacement is as of

In fact on my Alma-Linux VM:

root@c8host /bin[1009]$ ls virt-
virt-clone          virt-install  virt-pki-validate  virt-xml
virt-host-validate  virt-manager  virt-viewer        virt-xml-validate

Interesting that man virt-clone pages on my Alma-Linux mention virt-sysprep

but the tool simply was not anywhere on my Alma-Linux system. Looks like

a bug.

I also found that I could not install it on Alma either:

sudo dnf install virt-sysprep
Last metadata expiration check: 0:02:47 ago on Mon 07 Mar 2022 03:54:18 PM EST.
No match for argument: virt-sysprep
Error: Unable to find a match: virt-sysprep

5.10.2 For my older CentOS-8 image this is what I ran with virt-sysprep:

virsh suspend ncbz01
virt-clone --original ncbz01 --name ncbz02 \
    --file /var/lib/libvirt/images/ncbz02-disk01.qcow2
virsh resume ncbz01
virt-sysprep -d ncbz02 --hostname ncbz02 \
    --enable user-account,ssh-hostkeys,net-hostname,net-hwaddr,machine-id \
    --keep-user-accounts vivek --keep-user-accounts root \
    --run 'sed -i "s/192.168.122.16/192.168.122.17/" /etc/network/interfaces'

5.11 qemu-img info

You can run qemu-img info /var/lib/libvirt/images/vm2.qcow2 to get insight

into the kvm image. Good when investigating an error with that image.

5.12 delete a VM

- shutdown the vm

- destroy the VM (and undefine it)

- remove the disk image

5.12.1 1) virsh shutdown vm1

5.12.2 2) virsh destroy vm1

virsh undefine vm1

5.12.3 3) rm /var/lib/libvirt/images/vm1-disk1.img

5.12.4 3) rm /var/lib/libvirt/images/vm1-disk2.img

5.13 To disable virbr0, try:

> virsh net-destroy default

> virsh net-undefine default

> service libvirtd restart

> ifconfig

5.14 Behaviour before starting vm

sudo virsh list --all resulted in nothing: no VMs, active or inactive. This may have changed. I can now see all the VMs, both "shut off" and "running".

5.15 virsh console to a vm

virsh console vm1
virsh console --domain vm1 --safe

virsh help console

My issue is that the virtual serial console does NOT seem to have a login prompt. I can change that too, on the guest VM:

sudo systemctl enable serial-getty@ttyS0.service
sudo systemctl start serial-getty@ttyS0.service

5.15.1 what tty am I on?

tty # how logical is that???? Gotta love unix.

My kvm console is /dev/tty1
My ssh session is /dev/pts/0

5.16 exit a virsh console using the ^] escape character.

To exit the virsh console session (not a trivial task), some guides say CTRL-SHIFT-] (is that the same as C-} ????).

Nope, for me it was actually NOT shifted, i.e. just plain C-]

Can also try CTRL-SHIFT-5 (is that the same as C-% ??)

5.17 Accessing (starting) VMM from the command line:

virt-manager

5.18 Allowing a tty login on the virsh console:

Once you can virsh console into a vm, say vm2, then you have to also enable that console tty to have a login.

Old way: On the virtual machine, add console=ttyS0 at the end of the kernel lines in the /boot/grub2/grub.cfg file.

But grub.cfg should NOT be edited by hand. So the new way:

Edit the /etc/default/grub file, add console=ttyS0 to the GRUB_CMDLINE_LINUX variable, and run grub2-mkconfig -o /boot/grub2/grub.cfg as root.

Now, reboot the virtual machine:

Connected to domain vm.example.com
Escape character is ^]

Red Hat Enterprise Linux Server 7.0 (Maipo)
Kernel 3.10.0-121.el7.x86_64 on an x86_64

vm login:
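Before rebooting, it can be worth confirming that the kernel command line really picked up the console setting. A minimal sketch; has_serial_console is a hypothetical helper, and it only checks that console=ttyS0 appears somewhere on the GRUB_CMDLINE_LINUX line of the given file.

```shell
# Hypothetical helper: succeed if the grub defaults file (default
# /etc/default/grub) has console=ttyS0 in GRUB_CMDLINE_LINUX.
has_serial_console() {
    grep -q '^GRUB_CMDLINE_LINUX=.*console=ttyS0' "${1:-/etc/default/grub}"
}
```

On the guest: has_serial_console && echo "serial console configured"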

5.19 How I recovered / restored a VM from a backed up .qcow2 image

I had deleted the guest VM from VMM completely. I had a backup so I

cp /backup/full/pangaea.qcow2 /var/lib/libvirt/images

That was actually a gzip of the image so I had to run:

cd /var/lib/libvirt/images

gunzip pangaea.qcow2

That resulted in a proper .qcow2 image in the right location, but before I could run it via libvirt, I had to use VMM to import it, via:

- create a new VM

- import existing disk image

- browse to /var/lib/libvirt/images and select the pangaea.qcow2 file

- pick a name for the VM that is different from the image. I used "pan". I found this out after twice trying to create the "new" VM as "pangaea"; that did not work.

- This booted and all the settings of "pangaea" were restored. Only VMM sees the "domain" as "pan" and not "pangaea".

6 KVM guest networking

If the guest VM is running KVM, then run it in bridged mode, so its "outside adapter" gets full LAN connectivity, as just another host on that LAN.

Then VMM guests can be configured using a NATed "bridge" network, say virbr0, on a separate IP subnet, say 192.168.111.0/24. All guests get a 111.x address and can see each other, but when they talk to the outside, they get NATed to the Fusion guest VM's network, and all appear on the LAN as that guest host.

7 VMM on a KVM guest VM

7.1 Finding ip addr of KVM guest VM from the host

On a terminal session on the KVM host, type

virsh net-list

virsh net-dhcp-leases <networkname>
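To pull out just the address for one guest, the lease table can be filtered by hostname. This is a sketch under assumptions: lease_ip is a made-up helper, and it assumes the usual net-dhcp-leases column order (expiry date, expiry time, MAC, protocol, IP/prefix, hostname) with two header lines.

```shell
# Hypothetical helper: read "virsh net-dhcp-leases <network>" output on stdin
# and print the IP address (without the /prefix) leased to the given hostname.
lease_ip() {
    awk -v host="$1" 'NR > 2 && $6 == host { sub(/\/.*/, "", $5); print $5 }'
}
```

Usage would look like: sudo virsh net-dhcp-leases default | lease_ip vm1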

To delete a KVM guest use:

virsh list

virsh shutdown VM

virsh undefine VM

7.2 KVM is both a Type-1 and Type-2 Hypervisor

KVM is not a clear case as it could be categorized as either one. The KVM kernel module turns Linux kernel into a type 1 bare-metal hypervisor, while the overall system could be categorized to type 2 because the host OS is still fully functional and the other VM's are standard Linux processes from its perspective.

8 libvirt Networking

This from: wiki.libvirt.org

8.1 Networking

What is libvirt doing with iptables?

By default, libvirt provides a virtual network named 'default' which acts as a

NAT router for virtual machines, routing traffic to the network connected to

your host machine. This functionality uses iptables.

For more info, see: https://wiki.libvirt.org/page/VirtualNetworking in particular the section on NAT forwarding.

- How can I make libvirt stop using iptables?

WARNING: Any VMs already configured to use these virtual networks will need

to be edited: simply removing the <interface> devices via 'virsh edit' should

be sufficient. Not doing this step will cause starting to fail, and even

after the editing step the VMs will not have network access.

You can remove all libvirt virtual networks on the machine:

Use 'virsh net-list --all' to see a list of all virtual networks

Use 'virsh net-destroy $net-name' to shutdown each running network

Use 'virsh net-undefine $net-name' to permanently remove the network

Why doesn't libvirt just auto configure a regular network bridge?

While this would be nice, it is difficult/impossible to do in a safe way that won't hit a lot of trouble with non trivial networking configurations. A static bridge is also not compatible with a laptop mode of networking, switching between wireless and wired. Static bridges also do not play well with NetworkManager as of this writing (Feb 2010).

You can find more info about the motivation virtual networks here: http://www.gnome.org/~markmc/virtual-networking.html

How do I manually configure a network bridge? See: Setting up a Host Bridge

How do I get my VM to use an existing network bridge? See: Configuring Guests to use a Host Bridge

How do I forward incoming connections to a guest that is connected via a NATed virtual network? See: Forwarding Incoming Connections

8.2 Setting up RHEL using NetworkManager

This section is for info purposes for now. (I did not use this for CentOS kvm configuration and setup.) This outlines how to set up bridging using standard network initscripts and systemctl.

Using NetworkManager directly: if your distro was released some time after 2015 and uses NetworkManager, it likely supports bridging natively. See these instructions for creating a bridge directly with NetworkManager:

Using nm-connection-editor UI: happyassassin.net

Using the command line: Lukas Zapletal blog on libvirt with bridge

8.3 Disabling NetworkManager

(for older distros. Otherwise leave it running. It is good.)

If your distro was released before 2015, the NetworkManager version likely

does not handle bridging, so it is necessary to use "classic" network

initscripts for the bridge, and to explicitly mark them as independent from

NetworkManager (the "NM_CONTROLLED=no" lines in the scripts below).

If desired, you can also completely disable NetworkManager:

Creating network initscripts

In the /etc/sysconfig/network-scripts directory it is necessary to create

2 config files. The first (ifcfg-eth0) defines your physical network

interface, and says that it will be part of a bridge:

# cat > ifcfg-eth0 <<EOF
DEVICE=eth0
HWADDR=00:16:76:D6:C9:45
ONBOOT=yes
BRIDGE=br0
NM_CONTROLLED=no
EOF

Obviously change the HWADDR to match your actual NIC's address. You may also wish to configure the device's MTU here using e.g. MTU=9000.

The second config file (ifcfg-br0) defines the bridge device:

# cat > ifcfg-br0 <<EOF
DEVICE=br0
TYPE=Bridge
BOOTPROTO=dhcp
ONBOOT=yes
DELAY=0
NM_CONTROLLED=no
EOF

WARNING: The line TYPE=Bridge is case-sensitive - it must have

uppercase 'B' and lower case 'ridge'
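Because a wrong case here fails quietly, a one-line check of the file before restarting networking can save a debugging session. A minimal sketch; bridge_type_ok is a hypothetical helper name.

```shell
# Hypothetical helper: succeed only if the given ifcfg file contains a line
# that is exactly TYPE=Bridge (grep -x matches whole lines, case-sensitively).
bridge_type_ok() {
    grep -qx 'TYPE=Bridge' "$1"
}
```

For example: bridge_type_ok /etc/sysconfig/network-scripts/ifcfg-br0 || echo "check the TYPE= line"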

After changing this restart networking (or simply reboot)

The final step is to disable netfilter on the bridge:

# cat >> /etc/sysctl.conf <<EOF
net.bridge.bridge-nf-call-ip6tables = 0
net.bridge.bridge-nf-call-iptables = 0
net.bridge.bridge-nf-call-arptables = 0
EOF
# sysctl -p /etc/sysctl.conf

It is recommended to do this for performance and security reasons. See Fedora bug #512206. Alternatively you can configure iptables to allow all traffic to be forwarded across the bridge:

You should now have a "shared physical device", to which guests can be attached and have full LAN access

# brctl show
bridge name     bridge id               STP enabled     interfaces
virbr0          8000.000000000000       yes
br0             8000.000e0cb30550       yes             eth0

Note how this bridge is completely independent of virbr0. Do NOT
attempt to attach a physical device to 'virbr0' - this is only for NAT
connectivity.

9 Recommendations in CentOS6 and CentOS7 using NetworkManager

This is for CentOS to prep and mod the KVM networking. For KVM's own internal virtual networking, see the section above called virsh commands for network

This from zapletalovi.com

yum -y install bridge-utils
yum -y groupinstall "Virtualization Tools"
export MAIN_CONN=enp8s0
bash -x <<EOS
systemctl stop libvirtd
nmcli c delete "$MAIN_CONN"
nmcli c delete "Wired connection 1"
nmcli c add type bridge ifname br0 autoconnect yes con-name br0 stp off
#nmcli c modify br0 ipv4.addresses 192.168.1.99/24 ipv4.method manual
#nmcli c modify br0 ipv4.gateway 192.168.1.1
#nmcli c modify br0 ipv4.dns 192.168.1.1
nmcli c add type bridge-slave autoconnect yes con-name "$MAIN_CONN" ifname "$MAIN_CONN" master br0
systemctl restart NetworkManager
systemctl start libvirtd
systemctl enable libvirtd
echo "net.ipv4.ip_forward = 1" | sudo tee /etc/sysctl.d/99-ipforward.conf
sysctl -p /etc/sysctl.d/99-ipforward.conf
EOS

10 libvirt config files:

/etc/libvirt/qemu/networks (but do not edit these. They are controlled by kvm. If you want to make changes, use sudo virsh net-edit <name>)

However, I found that the only way I could rename a network was to edit the files here… very carefully.

- I had to virsh edit vm1 on all the vms I had, to manually match the new network name.

- I had to rename the actual network file name too in /etc/libvirt/qemu/networks

10.0.1 See also: /org/virt-manager/virt-manager

for client information in ?dconf?

10.0.2 virt-manager config files

are in ~/.gconf/apps/virt-manager/


11 Changing vm1 from dhcp leased address to static using nmcli

The following instructions are for a subnet 192.168.111.0 Replace 111 with the last two digits of your student ID.

cd /etc/sysconfig/network-scripts

sudo cp ifcfg-ens3 ifcfg-ens3.original

cat ifcfg-ens3

ip -4 addr

nmcli connection

nmcli device

nmcli connection modify Wired\ connection\ 1 connection.id ens3

nmcli connection modify ens3 ipv4.addr "192.168.111.12"

cat ifcfg-Wired-connection_1

nmcli connection modify ens3 ipv4.gateway 192.168.111.1

ip -4 addr

nmcli connection modify ens3 ipv4.dns 192.168.111.1

nmcli connection modify ens3 ipv4.method manual

cat ifcfg-Wired_connection_1

nmcli c mod ens3 ipv4.dns 192.168.111.1 # let c8host resolve for guest VMs

nmcli connection

nmcli connection delete eth0

nmcli device

ls -lt

ip -4 addr

nmcli network off

nmcli network on

ip -4 addr

ping -c 3 -n cbc.ca

12 Changing ip address of virbr0 interface

Usually done through the GUI of VMM, but this may come in handy someday: circa 2015: If you change the virbr0 address manually, you may find it hasn't actually taken effect, even after a reboot.

That is because the proper way to change an IP address for virbr0 is through virsh.

virsh net-destroy default    # to stop the network
virsh net-start default      # to start it
virsh net-edit default       # to edit the network settings

Note to self: I have NOT tried this as of Jan 2020.

13 Manually config KVM bridge networking

From access.redhat.com: For anyone interested in KVM bridge networking, it's quite simple if you follow Jamie's advices plus this couple of things:

- Stop Network Manager: service NetworkManager stop

- Disable Network Manager on boot: chkconfig NetworkManager off

- Create /etc/sysconfig/network-scripts/ifcfg-br0 (or whatever your interface name is)

- ifcfg-br0 file (this is for using DHCP; otherwise use BOOTPROTO=none and
add IPADDR=, NETMASK=, GATEWAY=):

DEVICE=br0
ONBOOT=yes
TYPE=Bridge
BOOTPROTO=dhcp
STP=on
DELAY=0
NM_CONTROLLED=no

One important thing to note is that the TYPE parameter is case-sensitive, so
Bridge != bridge != BRIDGE. Be damn sure you type Bridge.

Another important thing is to prevent Network Manager from managing this interface by setting the NM_CONTROLLED param to "no". Although NM is disabled on boot, perhaps you will need to enable it again for some other needs in the future.

- Modify /etc/sysconfig/network-scripts/ifcfg-eth0 to match your environment:

DEVICE=eth0
HWADDR=
UUID=
ONBOOT=yes
TYPE=Ethernet
BOOTPROTO=dhcp
BRIDGE=br0
NM_CONTROLLED=no

Considerations about TYPE and NM_CONTROLLED params also apply.

Same thing on br1 and eth1 interfaces.

So, basically, MAC address is specified on the eth interface, IP address is specified on the bridge interface. Network Manager not managing those interfaces.

14 Resizing a guest disk space in KVM

14.1 qemu-img

The steps here utilize the kvm utility qemu-img, from the host linux server.

Shutdown the guest VM, in my example it is australinea. Then modify the KVM

settings to allocate more disk space to the guest VM.

14.2 First allocate disk space to the guest VM

Get info on the qcow2 disk image with the command:

qemu-img info /var/lib/libvirt/images/australinea.qcow2

zintis@c8host ~[1004]$

sudo qemu-img info /var/lib/libvirt/images/australinea.qcow2

image: /var/lib/libvirt/images/australinea.qcow2

file format: qcow2

virtual size: 5 GiB (5368709120 bytes)

disk size: 3.91 GiB

cluster_size: 65536

Format specific information:

compat: 1.1

lazy refcounts: true

refcount bits: 16

corrupt: false

zintis@c8host ~[1005]$

Notice that we have a virtual size of 5G, but a disk size of only 3.91G.
The virtual size is the size the virtual disk was given when it was created
or last expanded; it is the maximum the guest can use, here 5G. The disk size
is the current size of the qcow2 file, i.e. how much space the host actually
uses to store this VM.

We will be resizing the virtual size, adding 2 GB of space:

qemu-img resize /var/lib/libvirt/images/australinea.qcow2 +2G

sudo qemu-img resize /var/lib/libvirt/images/australinea.qcow2 +2G
Image resized.
zintis@c8host ~[1006]$

Check the new virtual size is recognized by using the info command again:

qemu-img info /var/lib/libvirt/images/australinea.qcow2

sudo qemu-img info /var/lib/libvirt/images/australinea.qcow2

image: /var/lib/libvirt/images/australinea.qcow2

file format: qcow2

virtual size: 7 GiB (7516192768 bytes)

disk size: 3.91 GiB

cluster_size: 65536

Format specific information:

compat: 1.1

lazy refcounts: true

refcount bits: 16

corrupt: false

zintis@c8host ~[1007]$

You should now see the additional space as far as qemu-img is concerned.

Ensure that there are no snapshots for this guest, because the next command won't work if you have snapshots:

virsh snapshot-list australinea

sudo virsh snapshot-list australinea
 Name   Creation Time   State
-------------------------------
zintis@c8host ~[1008]$

I had none.

Finally, list the fdisk now visible to the guest:

sudo fdisk -l /var/lib/libvirt/images/australinea.qcow2

sudo fdisk -l /var/lib/libvirt/images/australinea.qcow2
Disk /var/lib/libvirt/images/australinea.qcow2: 3.9 GiB, 4197646336 bytes, 8198528 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes
zintis@c8host ~[1009]$

Notice that fdisk still shows 3.9 GiB in my case. Run against the .qcow2 file itself, it reports the size of the file on the host (the "disk size" above), not the new 7 GiB virtual size.

14.3 Second grow your partition to use this extra space on the guest VM

14.3.1 boot and check disk size has room to expand


Boot the guest, and login, and use sudo (root privileges) for these commands:

Using lsblk should show you that vda has now grown from 5G to 7G in size.

lsblk
NAME        MAJ:MIN RM  SIZE RO TYPE MOUNTPOINT
sr0          11:0    1 1024M  0 rom
vda         252:0    0    7G  0 disk
├─vda1      252:1    0    1G  0 part /boot
└─vda2      252:2    0    4G  0 part
  ├─cl-root 253:0    0  3.5G  0 lvm  /
  └─cl-swap 253:1    0  512M  0 lvm  [SWAP]

But the logical volume (lvm) for / has not changed yet:

df -h
Filesystem           Size  Used Avail Use% Mounted on
devtmpfs             388M     0  388M   0% /dev
tmpfs                405M     0  405M   0% /dev/shm
tmpfs                405M  5.6M  400M   2% /run
tmpfs                405M     0  405M   0% /sys/fs/cgroup
/dev/mapper/cl-root  3.4G  3.1G  101M  97% /
/dev/vda1            976M  211M  699M  24% /boot
tmpfs                 81M     0   81M   0% /run/user/1000

So we have to repartition /dev/vda so that vda2 gets to use the extra 2 GB of space we added to this guest. For this CentOS8 guest, I downloaded and installed growpart. The package is called cloud-utils-growpart.

14.3.2 install growpart

If you do not yet have it, download and install growpart. The package is called

cloud-utils-growpart

zintis@australinea~[602] $ sudo dnf install cloud-utils-growpart
Last metadata expiration check: 1 day, 17:53:16 ago on Thu 02 Dec 2021 08:34:47 PM EST.
Dependencies resolved.
================================================================================
 Package                  Architecture   Version          Repository       Size
================================================================================
Installing:
 cloud-utils-growpart     noarch         0.31-3.el8       appstream        32 k

Transaction Summary
================================================================================
Install  1 Package

Total download size: 32 k
Installed size: 63 k
Is this ok [y/N]: y
Downloading Packages:
cloud-utils-growpart-0.31-3.el8.noarch.rpm       13 kB/s |  32 kB     00:02
--------------------------------------------------------------------------------
Total                                           7.9 kB/s |  32 kB     00:04
Running transaction check
Transaction check succeeded.
Running transaction test
Transaction test succeeded.
Running transaction
  Preparing        :                                                        1/1
  Installing       : cloud-utils-growpart-0.31-3.el8.noarch                 1/1
  Running scriptlet: cloud-utils-growpart-0.31-3.el8.noarch                 1/1
  Verifying        : cloud-utils-growpart-0.31-3.el8.noarch                 1/1

Installed:
  cloud-utils-growpart-0.31-3.el8.noarch

Complete!
zintis@australinea~[603] $

Next we use the newly installed utility, growpart, to grow the partition. In my case it will be partition 2 of vda. (See growpart -h or man growpart for more details on what growpart can do.)

14.3.3 Run growpart

zintis@australinea~[605] $ sudo growpart /dev/vda 2
CHANGED: partition=2 start=2099200 old: size=8386560 end=10485760 new: size=12580831 end=14680031
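growpart reports sizes in sectors. Assuming 512-byte sectors (as on this guest), a quick conversion shows the partition going from just under 4G to just under 6G. sectors_to_mib is a hypothetical helper name.

```shell
# Hypothetical helper: convert a count of 512-byte sectors to whole MiB.
sectors_to_mib() {
    echo $(( $1 * 512 / 1048576 ))    # 2048 sectors per MiB, rounded down
}
```

sectors_to_mib 8386560 prints 4095 (the old size) and sectors_to_mib 12580831 prints 6142 (the new size).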

Now lsblk should show that our disk space on vda2 has increased.

zintis@australinea~[606] $

lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sr0 11:0 1 1024M 0 rom

vda 252:0 0 7G 0 disk

├─vda1 252:1 0 1G 0 part /boot

└─vda2 252:2 0 6G 0 part

├─cl-root 253:0 0 3.5G 0 lvm /

└─cl-swap 253:1 0 512M 0 lvm [SWAP]

zintis@australinea~[607] $

So we are nearly there. vda2 now shows 6G, but the logical volumes, lvm

still show the original 3.5G in space.

14.3.4 Step 3

Resize the root logical volume to occupy all the space. There are several
commands that are useful here. pvs ("physical volume show") shows the size of
your physical volumes. Then you can use pvresize to resize the physical
volume. Then lvs ("logical volume show") shows the logical volumes. Notice
that both show which volume group (VG) they belong to. You will need that
info. In my case the VG is "cl".

First pvs and pvresize /dev/vda2

zintis@australinea~[608] $ sudo pvs
  PV         VG Fmt  Attr PSize  PFree
  /dev/vda2  cl lvm2 a--  <4.00g    0
zintis@australinea~[609] $ sudo pvresize /dev/vda2
  Physical volume "/dev/vda2" changed
  1 physical volume(s) resized or updated / 0 physical volume(s) not resized
zintis@australinea~[610] $ sudo pvs
  PV         VG Fmt  Attr PSize  PFree
  /dev/vda2  cl lvm2 a--  <6.00g 2.00g
zintis@australinea~[611] $

Notice that now vda2 has 6G of space, with 2G free.

Second lvs to show the logical volume size.

sudo lvs
  LV   VG Attr       LSize   Pool Origin Data%  Meta%  Move Log Cpy%Sync Convert
  root cl -wi-ao----  <3.50g
  swap cl -wi-ao---- 512.00m
zintis@australinea~[614] $

Notice that root still shows 3.5G, not the 6G we have available.

14.3.5 Step 4

Determine the volume group size. Remember in my Centos8 VM, the vg was "cl".

zintis@australinea~[614] $ sudo vgs
  VG #PV #LV #SN Attr   VSize  VFree
  cl   1   2   0 wz--n- <6.00g 2.00g
zintis@australinea~[615] $

Also determine the correct Filesystem to extend using the df -hT command. In

my case it was /dev/mapper/cl-root

df -hT

zintis@australinea~[615] $ df -hT
Filesystem          Type      Size  Used Avail Use% Mounted on
devtmpfs            devtmpfs  388M     0  388M   0% /dev
tmpfs               tmpfs     405M     0  405M   0% /dev/shm
tmpfs               tmpfs     405M  5.6M  400M   2% /run
tmpfs               tmpfs     405M     0  405M   0% /sys/fs/cgroup
/dev/mapper/cl-root ext4      3.4G  2.7G  568M  83% /
/dev/vda1           ext4      976M  211M  699M  24% /boot
tmpfs               tmpfs      81M     0   81M   0% /run/user/1000
zintis@australinea~[616] $

Notice that / mount point still shows 3.4G size on /dev/mapper/cl-root

14.3.6 Step 5

Then resize logical volume used by the root file system using the extended volume group:

sudo lvextend -r -l +100%FREE /dev/name-of-volume-group/root

-l  extend the logical volume size in units of logical extents
-r  resize the underlying filesystem together with the logical volume

sudo lvextend -r -l +100%FREE /dev/mapper/cl-root
  Size of logical volume cl/root changed from <3.50 GiB (895 extents) to <5.50 GiB (1407 extents).
  Logical volume cl/root successfully resized.
resize2fs 1.45.6 (20-Mar-2020)
Filesystem at /dev/mapper/cl-root is mounted on /; on-line resizing required
old_desc_blocks = 1, new_desc_blocks = 1
The filesystem on /dev/mapper/cl-root is now 1440768 (4k) blocks long.

zintis@australinea~[617] $ df -hT
Filesystem          Type      Size  Used Avail Use% Mounted on
devtmpfs            devtmpfs  388M     0  388M   0% /dev
tmpfs               tmpfs     405M     0  405M   0% /dev/shm
tmpfs               tmpfs     405M  5.6M  400M   2% /run
tmpfs               tmpfs     405M     0  405M   0% /sys/fs/cgroup
/dev/mapper/cl-root ext4      5.4G  2.7G  2.5G  52% /
/dev/vda1           ext4      976M  211M  699M  24% /boot
tmpfs               tmpfs      81M     0   81M   0% /run/user/1000
zintis@australinea~[618] $

Notice that the size went up by 2 GB. It is now 5.4G. That's it.
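The extent counts in the lvextend output can be sanity-checked the same way. This sketch assumes the LVM default extent size of 4 MiB (I did not check it on this guest with vgdisplay); extents_to_mib is a hypothetical helper name.

```shell
# Hypothetical helper: convert a number of LVM extents to MiB.
# The second argument is the extent size in MiB, defaulting to 4.
extents_to_mib() {
    echo $(( $1 * ${2:-4} ))
}
```

With that assumption, extents_to_mib 895 prints 3580 and extents_to_mib 1407 prints 5628, a difference of 2048 MiB, which is the 2G that was added.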

Well, I did a reboot, but everything worked great.

15 Duplicating the above section using ansible.

See the file ansible.org for detail on ansible. Here I am simply including

the three ansible playbooks I used to duplicate the above steps with my

other four virtual servers.

15.0.1 Part 1 of 3

---
- name: Part 1 of 3 growing volumes by 2G
  hosts: continents
  tasks:
    - name: run lsblk to check partition sizes
      ansible.builtin.command: lsblk
      register: LSBLK
    - debug: msg=:{{LSBLK.stdout}}
    - name: run df -hT to confirm available disks
      ansible.builtin.command: df -hT
      register: DISKFREE
    - debug: msg=:{{DISKFREE.stdout}}
    - name: install growpart
      dnf:
        name: cloud-utils-growpart
        state: present
      become: true
    - name: Use growpart on vda2 to grow it by 2G
      ansible.builtin.command: growpart /dev/vda 2
      become: true
    - name: run lsblk
      ansible.builtin.command: lsblk
      register: LSBLK
    - debug: msg=:{{LSBLK.stdout}}

15.0.2 Part 2 of 3

---
- name: Part 2 of 3 growing volumes by 2G
  hosts: continents
  tasks:
    - name: show physical volume size with pvs
      ansible.builtin.command: pvs
      become: true
      register: PVS
    - debug: msg=:{{PVS.stdout}}
    - name: resize vda2 physical volume with pvresize
      ansible.builtin.command: pvresize /dev/vda2
      become: true
      register: RESIZE
    - debug: msg=:{{RESIZE.stdout}}
    - name: show physical volume size with pvs
      ansible.builtin.command: pvs
      become: true
      register: PVS
    - debug: msg=:{{PVS.stdout}}

15.0.3 Part 3 of 3

---
- name: Part 3 of 3 growing volumes by 2G
  hosts: continents
  tasks:
    - name: show logical volume size with lvs
      ansible.builtin.command: lvs
      become: true
      register: LVS
    - debug: msg=:{{LVS.stdout}}
    - name: show volume group size with vgs
      ansible.builtin.command: vgs
      become: true
      register: VGS
    - debug: msg=:{{VGS.stdout}}
    - name: Extend logical volume cl-root with lvextend
      ansible.builtin.command: lvextend -r -l +100%FREE /dev/mapper/cl-root
      become: true
      register: LVEXT
    - debug: msg=:{{LVEXT.stdout}}
    - name: show resulting disk free.
      ansible.builtin.command: df -hT
      register: DISKFREE
    - debug: msg=:{{DISKFREE.stdout}}